Introduction

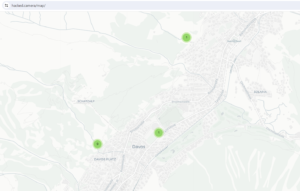

The world has witnessed countless protests throughout history, as people express their grievances and demand change. Paris, known for its passionate demonstrations, has experienced its fair share of protests in recent times. Other places, such as Barcelona (September 2022) and the USA (Black Lives Matter, 2020), have suffered looting and even deaths during protests. However, a concerning new trend has emerged, where protesters take advantage of the chaos to break into stores, including prominent ones such as the Apple Store, Orange, supermarkets, and a motorcycle shop, among others. While the immediate impact of such actions is evident, there is a lesser-known risk: these protesters could exploit the opportunity to install Red Team hardware and compromise the cybersecurity of these companies. In this post, we explore this potential threat and shed light on its implications.

Understanding Red Team Hardware

Before delving into the potential cybersecurity risks posed by protesters, it’s crucial to understand what Red Team hardware entails. Red Team hardware refers to devices or equipment used to simulate cyberattacks, often employed by ethical hackers or security professionals to evaluate the security posture of an organization. These tools aim to identify vulnerabilities and assess the effectiveness of security measures in place.

The Protester’s Advantage

During large-scale protests, chaos often ensues, leading to vandalism, looting, and destruction of property. In the case of high-profile stores such as the Apple Store or Orange, these incidents attract widespread attention. Amidst the pandemonium, protesters who possess knowledge about Red Team hardware might exploit the opportunity to install such devices within the compromised stores.

Installation of Red Team Hardware

Protesters who gain access to these stores can potentially plant Red Team hardware, ranging from small devices to sophisticated equipment, within the store’s infrastructure. These devices may go undetected initially, as the focus of security teams and law enforcement is primarily on controlling the protests and minimizing damage. The installed hardware can serve as an entry point for cybercriminals to gain unauthorized access to the store’s network, compromising the cybersecurity of the company and potentially accessing sensitive customer data.

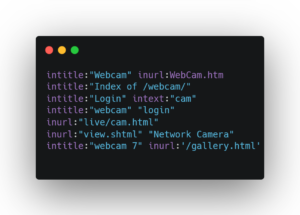

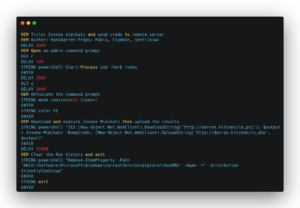

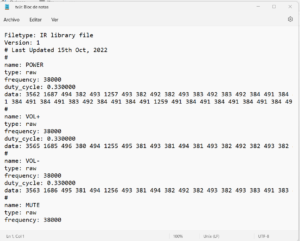

Let’s explore different attack vectors that an attacker could use (I used these devices and more in my Red Team engagements):

- Search for passwords in the store: passwords are often written on Post-it notes or on stickers attached to routers and access points.

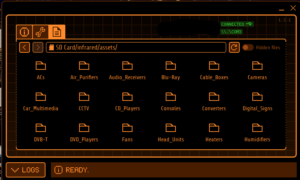

- Install a dropbox: a Red Team dropbox is a tiny computer, such as a Raspberry Pi, deployed and hidden to launch network and wireless attacks. I have talked about dropboxes at different conferences: BSidesSF 2019 and Mundo Hacker Day 2021.

- Bash Bunny: from pentesting vendor Hak5, the Bash Bunny is a multi-vector USB attack platform.

- EvilCrow Cable: a BadUSB cable.

- EvilCrow Keylogger: USB keylogger with WIFI support for data exfiltration.

- Shark Jack: Hak5 device for network attacks that plugs into an Ethernet port.


- Key Croc: Another Hak5 gadget for keylogging.

- Lan Turtle: Hak5 covert USB Ethernet adapter for network attacks.

- Packet Squirrel: A man-in-the-middle network sniffer by Hak5.

- Rubber Ducky: the mighty USB keystroke injection attack platform by Hak5.

- WHID Cactus: USB HID injector with WIFI support.

- WIFI Pineapple: The powerful wireless attack platform by Hak5. The Nano (left) and the Mark VII (right).

Keep in mind these devices are just some of the options; many more exist, with all kinds of capabilities.
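Several of the devices above (Rubber Ducky, Bash Bunny, WHID Cactus, EvilCrow Cable) rely on keystroke injection, which usually types far faster than a person can. As a rough defensive illustration, the sketch below flags a burst of keystrokes whose gaps are all shorter than a threshold. The function name, the thresholds, and the idea of feeding it a list of timestamps are assumptions made for this example, not part of any Hak5 tool or of my engagements; injection payloads can also add delays to evade this kind of check.

```python
from typing import List

# Hypothetical thresholds: tune them for your own environment.
FAST_INTERVAL_MS = 30.0   # gaps shorter than this look automated
MIN_BURST_LENGTH = 10     # consecutive fast keystrokes needed to raise a flag


def looks_like_injection(timestamps_ms: List[float],
                         fast_interval_ms: float = FAST_INTERVAL_MS,
                         min_burst: int = MIN_BURST_LENGTH) -> bool:
    """Return True if the timestamps contain a run of at least `min_burst`
    keystrokes whose gaps are all below `fast_interval_ms`."""
    burst = 1  # number of keystrokes in the current fast run
    for previous, current in zip(timestamps_ms, timestamps_ms[1:]):
        if current - previous < fast_interval_ms:
            burst += 1
            if burst >= min_burst:
                return True
        else:
            burst = 1  # a slow gap ends the run
    return False


# Synthetic data for illustration only.
human_typing = [i * 180.0 for i in range(20)]    # one key every 180 ms
injected_typing = [i * 5.0 for i in range(20)]   # one key every 5 ms

print(looks_like_injection(human_typing))
print(looks_like_injection(injected_typing))
```

A heuristic like this is only one signal; it works best combined with USB port controls and endpoint monitoring.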

Cybersecurity Implications

The consequences of compromised cybersecurity are severe, not only for the affected companies but also for their customers and business partners. Here are a few potential implications:

Data Breach: A successful infiltration can lead to unauthorized access to customer data, including personal and financial information. This breach can have far-reaching consequences, such as identity theft, fraud, and reputational damage to the affected companies.

Intellectual Property Theft: Companies like Apple often possess valuable intellectual property that is highly sought after by competitors or malicious actors. Breaching their cybersecurity can expose trade secrets, patents, and proprietary information, jeopardizing their competitive edge and potentially leading to financial losses.

Customer Trust: A data breach or compromised cybersecurity can erode customer trust in the affected companies. Customers may hesitate to share their information or engage in business transactions, leading to a loss of revenue and long-term damage to the company’s reputation.

Supply Chain Vulnerabilities: If a compromised company is part of a larger supply chain, the cyber attack can extend its reach to other organizations connected to the network. This ripple effect can further amplify the impact of the initial breach, potentially disrupting entire industries and causing significant economic damage.

Preventive Measures and Mitigation Strategies

To mitigate the risks highlighted above, it is imperative for companies to prioritize their cybersecurity efforts. Here are some recommended preventive measures:

Robust Physical Security: Strengthening physical security measures, including improved surveillance, alarms, and reinforced entry points, can help deter unauthorized access and limit opportunities for protesters to install Red Team hardware.

Network Monitoring: Implementing advanced network monitoring tools can aid in the detection of any suspicious activities or unauthorized access attempts, enabling a swift response to potential threats.

Regular Security Audits: Conducting regular security audits and vulnerability assessments can identify weaknesses in the system and help implement necessary safeguards.

Employee Awareness: Educating employees about the risks of social engineering and physical tampering can help them identify and report suspicious behavior promptly.
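As a small illustration of the network monitoring and audit measures above, the following sketch compares the MAC addresses seen on a store network against an approved inventory and reports anything unexpected, such as a hidden dropbox. How the observed addresses are collected (switch tables, DHCP leases, ARP scans) is left out, and the function names and the short vendor-prefix table are assumptions for this example; verify any prefix against the IEEE registry before relying on it.

```python
from typing import Dict, Iterable, List, Set

# Illustrative OUI prefixes commonly associated with Raspberry Pi boards.
# Assumption: this table is incomplete and should be checked against the
# IEEE registry.
WATCHED_OUIS: Dict[str, str] = {
    "b8:27:eb": "Raspberry Pi",
    "dc:a6:32": "Raspberry Pi",
}


def normalize_mac(mac: str) -> str:
    """Lowercase a MAC address and use ':' as the separator."""
    return mac.strip().lower().replace("-", ":")


def audit_devices(observed: Iterable[str],
                  approved: Iterable[str]) -> List[Dict[str, str]]:
    """Return one finding per observed MAC that is not in the approved list."""
    approved_set: Set[str] = {normalize_mac(m) for m in approved}
    findings: List[Dict[str, str]] = []
    for mac in sorted({normalize_mac(m) for m in observed}):
        if mac in approved_set:
            continue
        # The first three octets (8 characters with separators) identify the vendor.
        hint = WATCHED_OUIS.get(mac[:8], "unknown vendor")
        findings.append({"mac": mac, "hint": hint})
    return findings


# Made-up addresses for illustration only.
approved_inventory = ["aa:bb:cc:00:11:22"]
seen_on_network = ["AA-BB-CC-00-11-22", "b8:27:eb:12:34:56", "00:11:22:33:44:55"]

for finding in audit_devices(seen_on_network, approved_inventory):
    print(finding["mac"], finding["hint"])
```

MAC addresses are easy to spoof, so an allowlist check like this catches careless implants rather than determined attackers; port-level controls such as 802.1X are a stronger complement.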

Conclusion

While protests serve as an important avenue for expressing grievances, the potential exploitation of such events to compromise cybersecurity is a growing concern. The recent incidents of protesters breaking into stores such as the Apple Store and Orange in Paris highlight the need for heightened security measures to mitigate the risks posed by Red Team hardware installation. Companies must remain vigilant, continuously enhance their cybersecurity practices, and collaborate with law enforcement agencies to prevent unauthorized access and safeguard sensitive data. By doing so, they can maintain the trust of their customers and protect themselves from potential cyber threats, ultimately ensuring the long-term sustainability of their businesses.

Thoughts? Would you like to learn more about Red Team devices? Let me know in the comments.

@simonroses